Much of our work, learning, and communication now happens online, and fake and manipulated content has become a real problem in that environment. People deliberately mislead others by spreading false information and manipulating media. Intel Labs, Intel’s research group, is addressing this problem by helping people distinguish between authentic and deep fake material, with the aim of helping users rebuild their trust in the media.

To lead and coordinate research activities in Trusted Media, Intel Labs collaborates with Intel’s security research team and other internal divisions.

They are investigating how to integrate media provenance (the origin and history of a piece of material) and detection technology into Intel products. They are also researching how users might incorporate these new technologies into their own platforms.

The two key research areas are deep fake detection, which identifies media that has been produced or manipulated using machine learning and AI, and media authentication technology, which confirms the authenticity of material.

What is a deep fake?

Deep fakes are fake videos or pictures that look incredibly authentic. Although some deep fakes might look innocent, they can be exploited for crimes such as identity theft and forgery, as well as for propaganda. Intel is attempting to address this issue by creating algorithms and architectures that can determine whether a piece of material has been modified using AI techniques.

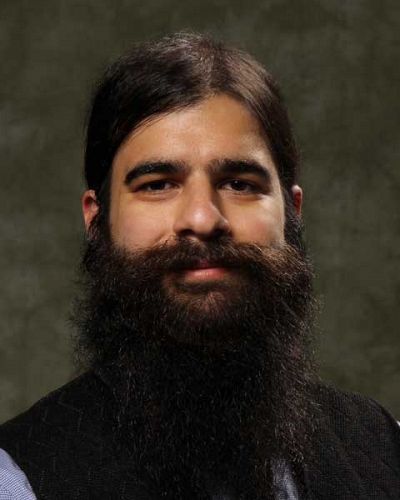

Intel’s deep fake detection technology, known as FakeCatcher, runs on Intel Xeon Scalable processors. It uses algorithms created by Intel research scientist Ilke Demir and Professor Umur Ciftci of the State University of New York at Binghamton.

FakeCatcher uses remote photoplethysmography to examine the tiny color variations that blood flow causes in the pixels of a video. It collects these signals across several frames and then passes the resulting signatures through a classifier to determine whether the video is authentic or fake.

Photoplethysmography methods

Photoplethysmography (PPG) is a non-invasive method for detecting variations in blood volume in peripheral blood vessels. It measures differences in light absorption caused by variations in blood flow.
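To make the idea of reading a pulse from pixels more concrete, here is a minimal sketch. It is not FakeCatcher’s algorithm. It assumes a hypothetical face region whose average green value rises and falls slightly with each heartbeat, generates that region synthetically, and recovers the pulse rate with a plain discrete Fourier transform. The helper names `mean_green` and `dominant_frequency_hz` and the 0.7 to 4.0 Hz search band are illustrative assumptions.

```python
import math

def mean_green(frame):
    """Average green value of a frame given as a list of (r, g, b) pixels."""
    return sum(g for _, g, _ in frame) / len(frame)

def dominant_frequency_hz(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the strongest frequency within [low_hz, high_hz] using a plain DFT."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the constant (DC) component
    best_freq, best_power = 0.0, 0.0
    for k in range(1, n // 2):
        freq = k * fps / n
        if not low_hz <= freq <= high_hz:
            continue  # skip frequencies outside the assumed pulse band
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq

# Synthetic stand-in for a face region: 10 seconds at 30 fps,
# with the green channel oscillating at 1.2 Hz (an assumed pulse).
FPS = 30
frames = [
    [(120, 100 + 2 * math.sin(2 * math.pi * 1.2 * t / FPS), 90)] * 4
    for t in range(10 * FPS)
]
signal = [mean_green(frame) for frame in frames]
pulse_hz = dominant_frequency_hz(signal, FPS)
print(f"Estimated pulse: {pulse_hz * 60:.0f} beats per minute")
```

Because the synthetic signal was generated at 1.2 Hz, the estimate should come out at roughly 72 beats per minute. A real video would need face tracking and noise handling, and a detector would go on to compare such signals across regions of the face.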

Deep fake detection research focuses both on telling fake videos from real ones and on learning how the fakes are made. Intel researchers use convolutional neural networks and other deep learning techniques to classify the generators that produce deep fakes. They examine a variety of video characteristics, including eye and gaze movements, geometric and visual differences, and spectral variations, to develop a signature that can accurately identify whether a video is real or fake.
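As a rough illustration of how a signature built from several video characteristics can be turned into a real-or-fake decision, the sketch below swaps the neural networks described above for a much simpler nearest-centroid classifier. Every number in it is made up, and the three features (gaze consistency, geometric error, spectral energy) are hypothetical stand-ins rather than Intel’s actual features.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def classify(signature, centroids):
    """Return the label whose centroid is closest (Euclidean distance) to the signature."""
    return min(centroids, key=lambda label: math.dist(signature, centroids[label]))

# Hypothetical, made-up signatures:
# [gaze consistency, geometric error, spectral energy]
training = {
    "real": [[0.90, 0.10, 0.20], [0.85, 0.15, 0.25]],
    "fake": [[0.40, 0.60, 0.70], [0.35, 0.55, 0.80]],
}
centroids = {label: centroid(vectors) for label, vectors in training.items()}

label = classify([0.45, 0.50, 0.65], centroids)
print(label)
```

The example signature sits much closer to the “fake” centroid, so it is labeled as fake. A production detector would learn its features and decision boundary from large labeled datasets instead of a handful of hand-written vectors.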

Beyond Intel’s own efforts, the wider industry is also fighting deep fakes. Together with other experts from industry and academia, Intel researcher Ilke Demir wrote a white paper titled “DEEP FAKERY – An Action Plan.” The paper examines the effects of deep fakes and offers solutions to the problem.

The white paper likely examines the difficulties and dangers connected with deep fakes and emphasizes the need for effective countermeasures. It may shed light on the technical aspects of provenance verification, authentication, and deep fake detection. It may also include proposals and strategies for stakeholders such as technology firms, academics, policymakers, and content producers to work together on the deep fake problem.

Intel is also a member of the Coalition for Content Provenance and Authenticity (C2PA), which develops technical standards for verifying the origin and history of media content in order to combat false information online.
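The basic idea behind provenance checking can be sketched in a few lines. This is not the C2PA manifest format or any C2PA API. It is a simplified illustration, assuming a hypothetical manifest that records who created a piece of content, what was done to it, and a SHA-256 hash of its bytes. Real C2PA manifests are also cryptographically signed, which this sketch leaves out.

```python
import hashlib
import json

def make_manifest(content: bytes, creator: str, history: list) -> dict:
    """Build a toy provenance record (hypothetical format, not C2PA)."""
    return {
        "creator": creator,
        "history": history,
        "sha256": hashlib.sha256(content).hexdigest(),
    }

def matches_manifest(content: bytes, manifest: dict) -> bool:
    """Check that the content is byte-for-byte what the manifest describes."""
    return hashlib.sha256(content).hexdigest() == manifest["sha256"]

original = b"frame data of the original video"
manifest = make_manifest(original, "Example Newsroom", ["captured", "cropped"])

print(json.dumps(manifest, indent=2))
print(matches_manifest(original, manifest))               # content unchanged
print(matches_manifest(original + b" edited", manifest))  # content altered after the fact
```

Any change to the bytes after the manifest was written changes the hash, so the second check fails. Without a signature, however, nothing stops someone from rewriting the manifest itself, which is why signed manifests matter in practice.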

Conclusion

The broad availability of video editing tools and AI has made it simpler for people to produce fake and manipulated material. Drawing on its expertise in algorithm and architecture design, Intel is developing solutions that can identify fake media and help restore trust in the media as a reliable record of real events. Intel also actively participates in industry initiatives, research projects, and partnerships to combat deep fakes and protect the veracity of media material.